The Epic AI and Cosmos Reality Check: Your Customizations Just Became AI Liabilities

Every “improvement” you made to Epic is about to get stress-tested by artificial intelligence

At this year’s UGM, Epic showcased Cosmos with the kind of AI demonstrations that make healthcare executives and clinicians dream. Predictive ordering that anticipates what clinicians need. Clinical decision support that learns from billions of encounters. Workflow automation sourced from data generated by 300 million patients.

You left Madison convinced this would transform your organization. Your team started planning AI pilots. Your board asked when you’d see results.

But here’s what the Cosmos demos didn’t show: the thousands of “reasonable” customization decisions you made over the past decade just became mathematical noise in AI training models. You know these customizations. They are the ones that gave your analysts and informaticists little dopamine boluses because they were so slick.

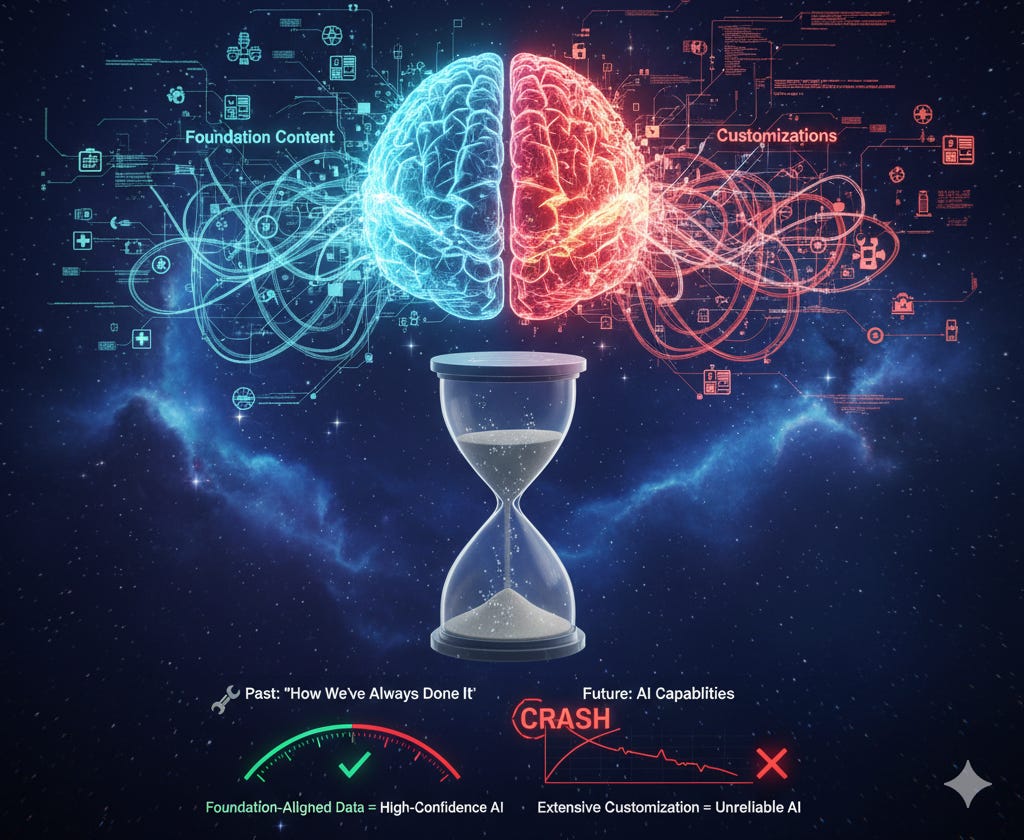

The problem is that every time you strayed from Foundation content to match “how we’ve always done it,” you were optimizing for yesterday’s workflows while inadvertently degrading tomorrow’s AI capabilities.

I know this because, in many ways, I was a “customizer in chief.” One of my decisions was to customize our ED chief complaints.

A Parable of Good Intentions

In 2013, during our transition from Meditech to Epic, we made what seemed like a perfectly rational decision. We copied over the chief complaint library we had used in Meditech instead of adopting Epic’s Foundation content. Our team knew those complaint categories. They worked. Why force people to learn new ones?

For years, this worked fine. We even built a dynamic Quicklist, driven by the chief complaint, that functioned quite well. Clinicians got contextual suggestions. Workflows stayed smooth. Problem solved.

Except we hadn’t solved a problem. We had created a time bomb.

When we tried benchmarking against other Epic organizations, our data didn’t align. The Quicklist I was so proud of had brittle logic that broke with system updates. But these felt like manageable annoyances, not enough to justify the disruption of starting over and moving to Epic’s Foundation content.

Then, recently, I spoke with Epic’s Cosmos development team about predictive ordering models.

The conversation started with excitement about AI capabilities. The idea popped into my head that we could dynamically create an even more precise Quicklist using Cosmos and CoMET. Unfortunately, I had a sobering realization: our customized chief complaints weren’t just a local workflow preference anymore. They were creating dirty data that would make Cosmos predictions unreliable and potentially unusable.

The CoMET framework learns patterns from millions of standardized clinical encounters. When our “chest pain/angina” chief complaint doesn’t match Epic Foundation’s “chest pain,” the AI can’t accurately predict what our physicians need. The mathematics are unforgiving.
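To make the mismatch concrete, here is a deliberately crude sketch in Python. It is not how Cosmos or CoMET actually work. It assumes a hypothetical frequency-count model keyed on exact chief complaint labels, trained on made-up encounter data, and the helper names are illustrative only. Even in this toy form, it shows why a label the model never saw in training gives it nothing to predict from, and why a crosswalk to the standardized label restores the match.

```python
from collections import Counter, defaultdict

# Hypothetical pooled training data keyed by a standardized chief complaint
# label. Illustrative values only; not real Cosmos data.
POOLED_ENCOUNTERS = [
    ("chest pain", "troponin"),
    ("chest pain", "troponin"),
    ("chest pain", "troponin"),
    ("chest pain", "ecg"),
    ("chest pain", "ecg"),
    ("chest pain", "chest x-ray"),
    ("abdominal pain", "lipase"),
    ("abdominal pain", "ct abdomen/pelvis"),
]


def train_order_model(encounters):
    """Count how often each order appears with each chief complaint label."""
    model = defaultdict(Counter)
    for complaint, order in encounters:
        model[complaint][order] += 1
    return model


def suggest_orders(model, complaint, top_n=2):
    """Return the most frequent orders for an exact label match, if any."""
    counts = model.get(complaint)  # .get does not create a missing key
    if counts is None:
        return []  # label never seen in training: nothing to predict from
    return [order for order, _ in counts.most_common(top_n)]


# Hypothetical crosswalk from a local label to the standardized label.
LOCAL_TO_STANDARD = {"chest pain/angina": "chest pain"}


def to_standard(label):
    """Translate a local label if a crosswalk entry exists."""
    return LOCAL_TO_STANDARD.get(label, label)


model = train_order_model(POOLED_ENCOUNTERS)
print(suggest_orders(model, "chest pain"))                        # ['troponin', 'ecg']
print(suggest_orders(model, "chest pain/angina"))                 # []
print(suggest_orders(model, to_standard("chest pain/angina")))    # ['troponin', 'ecg']
```

A production model would presumably degrade less abruptly than returning an empty list, but the direction of the effect is the point: the further a local label drifts from the shared vocabulary, the less of the pooled pattern it can draw on.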

This isn’t just about my chief complaint quandary. It’s a parable for thousands of similar decisions playing out across every Epic implementation.

The Customization Multiplier Effect

Think about your Epic implementation. How many times did your team make similar calls?

Created custom flowsheets because Foundation “wasn’t quite right”

Built proprietary Category Lists instead of using Foundation structures

Agreed to create a custom surgical ORP procedure record for a high-volume surgeon

Created a Grouper de novo for a particular project even though you knew something similar already existed

Each modification was defensible. Each addressed real workflow friction. Each made your system work better for your users in that moment.

But each customization also created a small deviation from the data patterns Cosmos learned from. Individually, these deviations seem trivial. Collectively, they compound into mathematical chaos.

The Data Quality Equation

Here’s the reality of AI-driven healthcare: algorithms need consistency to recognize patterns. They don’t care that your customizations improved workflows in 2015. They only know your data doesn’t match the standardized inputs they trained on.

Think of it like fuel quality. An engine runs best on gasoline of a consistent grade, say 90 octane, and performance suffers dramatically when the fuel is inconsistent. Your customizations aren’t giving you 90-octane data. They’re giving you 90 octane plus or minus 10 (or more).

The mathematics are unforgiving:

Foundation-aligned data = high-confidence AI predictions

Slight customization = degraded prediction accuracy

Extensive customization = unreliable or unusable AI outputs

Cosmos and AI in general aren’t magic. These tools are pattern recognition at massive scale. When your patterns don’t match the training data, the predictions fail.

And we’re not just talking about order suggestions. As AI capabilities expand across clinical documentation, population health analytics, and operational optimization, every customization becomes an amplifier of uncertainty.

Your customized BP Flowsheet? Mathematical noise for clinical decision support AI. Your menagerie of similar but not quite identical Groupers? Inconsistent training data for population health. My custom chief complaints? Data poison for making ordering easier for my fellow ED physicians.

The customization debt accumulated over a decade is about to come due, with compound interest.

Why Foundation Matters More Than Ever

Epic’s Foundation System was designed for operational consistency. It made for easier upgrades, reduced maintenance burden, and improved interoperability. These benefits remain important.

But Foundation has become something more critical: the baseline for AI training data quality.

Organizations that stay close to Foundation will have cleaner inputs for Cosmos models. Their data aligns with the patterns these AI systems learned. Their predictions will be accurate, their recommendations relevant, their automation reliable.

Organizations with extensive customizations face a harder choice: accept degraded AI performance or invest in “Refuel” projects to realign with Foundation standards. The algorithms don’t compromise.

This connects to the potential virtuous cycle of Cosmos that I described at UGM 2022: clinical workflows generate data, data flows through Epic’s structures, analytics provide insights, and insights improve workflows.

But this cycle only works when data stays consistent across the entire Epic ecosystem: when Chronicles aligns with Caboodle structures, which align with Cosmos training sets, which in turn align with Chronicles-based operational feedback loops.

Customizations break this alignment. They create friction at every transformation point. What seemed like local optimization becomes system-wide degradation at AI scale.

The Strategic Audit You Need Now

Healthcare executives need to stop viewing AI as a magic wand that works regardless of data quality. The magic requires mathematical rigor and even more governance.

Organizations serious about AI-enabled healthcare need to audit their Epic customizations through a new lens. Not “what works today” but “what will work with AI capabilities arriving next quarter.”

Before implementing any future modification to Foundation content, ask:

Does this customization improve workflows enough to justify potential AI performance degradation?

Can we achieve the same clinical outcome using Foundation-aligned approaches?

What will the downstream Cosmos implications be in 12-24 months?

Are we optimizing for today’s muscle memory at the expense of tomorrow’s capabilities?

For existing customizations, the audit becomes more uncomfortable:

Which modifications were truly necessary versus “that’s how we’ve always done it”?

What would it cost to migrate back toward Foundation standards?

What’s the opportunity cost of degraded AI performance if we don’t?

To do this, you need to understand the entire data lifecycle, from Chronicles to Caboodle to Cosmos and back to Chronicles, and how each step connects to clinical outcomes and operational workflows.
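Part of that audit is mechanical and can start with a simple inventory. The Python sketch below assumes you have exported a list of local build items (here, chief complaints) and the corresponding Foundation list; the item names, the crosswalk, and the `alignment_report` helper are all hypothetical. It sorts each local item into aligned (identical to Foundation), mapped (matches only through a crosswalk), or unmapped (no Foundation equivalent identified), which gives a rough first measure of how far a build has drifted.

```python
# Hypothetical Foundation content and local build. Illustrative values only.
FOUNDATION_COMPLAINTS = {"chest pain", "abdominal pain", "shortness of breath", "headache"}
LOCAL_COMPLAINTS = ["chest pain/angina", "abdominal pain", "sob/dyspnea", "headache", "flank pain"]

# Hypothetical crosswalk from local labels to Foundation labels.
CROSSWALK = {"chest pain/angina": "chest pain", "sob/dyspnea": "shortness of breath"}


def alignment_report(local_items, foundation_items, crosswalk=None):
    """Classify each local item by how it relates to Foundation content."""
    crosswalk = crosswalk or {}
    aligned, mapped, unmapped = [], [], []
    for item in local_items:
        if item in foundation_items:
            aligned.append(item)   # identical to a Foundation item
        elif crosswalk.get(item) in foundation_items:
            mapped.append(item)    # matches only via the crosswalk
        else:
            unmapped.append(item)  # no Foundation equivalent identified
    total = len(local_items)
    return {
        "aligned": aligned,
        "mapped": mapped,
        "unmapped": unmapped,
        # Share of local items that match Foundation with no translation.
        "alignment_rate": len(aligned) / total if total else 1.0,
    }


report = alignment_report(LOCAL_COMPLAINTS, FOUNDATION_COMPLAINTS, CROSSWALK)
print(report["alignment_rate"])  # 0.4
print(report["unmapped"])        # ['flank pain']
```

Mapped items still work for analytics but carry the ongoing cost of maintaining the crosswalk; the unmapped bucket is where the “truly necessary versus how we’ve always done it” conversation starts.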

This is not to say that you can’t customize. That sort of rigidity would be as silly as excessive customization. You need to strike a good balance, and you need a team of analysts and informaticists who can expertly guide your organization on where and how to customize so that the negative effects are minimized.

The Hidden Opportunity

Here’s what makes this moment particularly critical: most organizations saw the Cosmos demos at UGM and started planning AI initiatives without auditing their data governance foundation.

They’re building AI strategies on top of data structures that will undermine those very strategies.

The organizations that recognize this pattern now, the ones that pause to assess their customization debt before rushing into AI pilots, will have a significant long-term competitive advantage. They’ll invest in data cleanup before it becomes an emergency. They’ll design governance frameworks that balance local needs with AI requirements. They’ll make intentional choices rather than discover consequences through failed implementations.

The math is simple: cleaner data enables better AI, better AI enables better clinical decisions, better clinical decisions improve patient and operational outcomes. Customizations that seemed costless in 2015 now carry AI tax penalties that compound with every Cosmos release.

The Question That Matters

The question isn’t whether AI will transform healthcare. The question is whether your data governance decisions from the past decade will enable or undermine that transformation.

Those thousands of “reasonable” customizations you made? They’re about to get stress-tested by pattern recognition algorithms that don’t care about your workflows, only about mathematical consistency.

You can ignore this and discover the consequences when your Cosmos implementations underperform. Or you can audit your Foundation alignment now and make strategic decisions about which customizations are worth keeping and which need unwinding.

Your chief complaints, Groupers, flowsheet rows, and SmartData elements from 2013 just became the foundation of your 2025 AI strategy.

The organizations that understand this early will capture the AI advantage. The ones that don’t will spend the next several years explaining why their Cosmos results don’t match the UGM demos.

Choose wisely.

John Lee is an emergency physician and Epic consultant who learned these lessons through direct implementation experience, including creating the exact problems he now helps organizations avoid. He posts frequently on LinkedIn.